The cloud has been one of the most hyped concepts in media technology over the past decade. For years, vendors, consultants, and keynote speakers at trade shows have urged broadcasters to “move to the cloud” as though it were a single, monolithic destination. Terms like “cloud-native” and “cloud-first” have been used liberally, often without any clear definition, and often to describe the same software running in a cloud container rather than on a local host machine. The promise seemed irresistible: infinite scalability, rapid innovation, and lower costs. Yet as the industry has matured, the reality has proved far more complex. Cloud has certainly transformed parts of the media workflow, but not all of it, and not in the same way for every organisation. What has become clear is that there is no single cloud model that fits every use case. Instead of asking whether to use the cloud, the real question is which type of cloud to use, how it should be deployed, and why. Cloud at its essence is a remote machine that you connect to in order to run a service. It can be owned by your organisation (private), run as a paid provider’s service (public), or be a mixture of both (hybrid).

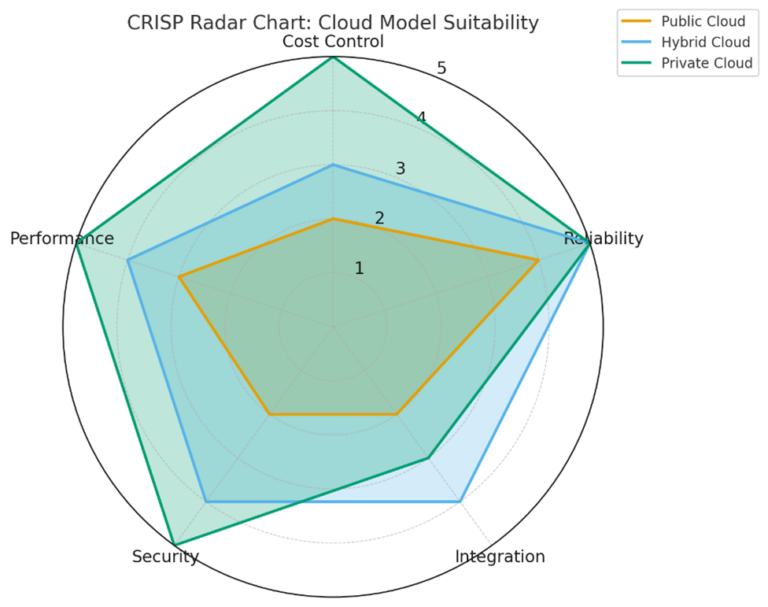

At GlobalM we have lived through this evolution first-hand. Working with leading sports federations, broadcasters, and news agencies, we have built and deployed protocol-native IP transport infrastructure across the world. What we see every day is that the choice between public, private, and hybrid cloud is not a binary one. It is a layered, strategic decision that touches on five dimensions: Cost, Reliability, Integration, Security and compliance, and Performance (CRISP). The CRISP Framework is a structured approach to making cloud choices that is based on operational reality rather than marketing slogans.

Much of the confusion in the industry comes from the fact that not all vendors mean the same thing when they say “cloud.” Public cloud refers to hyperscaler platforms such as AWS, Azure, and Google Cloud, with virtually unlimited compute power and global presence. For media companies, public cloud has been transformative for certain types of work, such as AI-driven metadata extraction, large-scale VOD encoding, archive storage, and compliance recording. The ability to spin up capacity on demand is powerful, but it comes with hidden costs. Data egress fees have caught many by surprise, eating into margins and undermining the supposed savings. On the other hand, public cloud is bought as a service and is therefore an OPEX-based cost, which in some countries is an advantage for accounting purposes.
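To see how quickly egress adds up, it helps to turn a stream bitrate into a monthly data volume. The sketch below is a rough estimate only: the function names are ours, the price per gigabyte is a hypothetical placeholder rather than any provider's actual tariff, and real bills depend on region, tiering, and destination.

```python
def monthly_egress_gb(bitrate_mbps, hours_per_month, destinations=1):
    """Data volume leaving the cloud in one month, in decimal gigabytes."""
    seconds = hours_per_month * 3600
    megabits = bitrate_mbps * seconds * destinations
    return megabits / 8 / 1000  # megabits -> megabytes -> gigabytes


def monthly_egress_cost(bitrate_mbps, hours_per_month, destinations, price_per_gb):
    """Egress volume multiplied by a flat per-gigabyte price (an assumption)."""
    volume_gb = monthly_egress_gb(bitrate_mbps, hours_per_month, destinations)
    return volume_gb * price_per_gb


if __name__ == "__main__":
    PRICE_PER_GB = 0.05  # hypothetical placeholder, not a quoted tariff
    # One 20 Mbps feed running 24 hours a day for 30 days (720 hours).
    for destinations in (1, 5):
        volume = monthly_egress_gb(20, 720, destinations)
        cost = monthly_egress_cost(20, 720, destinations, PRICE_PER_GB)
        print(f"{destinations} destination(s): {volume:.0f} GB, cost {cost:.2f}")
```

The point of the exercise is the multiplier: every additional destination served from the cloud repeats the full volume, so a single permanent feed sent to five receivers moves five times the data of the same feed sent to one.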

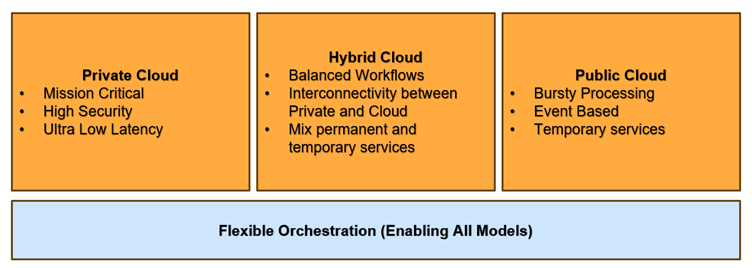

Private cloud sits at the opposite end of the spectrum. It usually takes the form of dedicated infrastructure in a managed facility or on-premises data centre, under the full control of the operator. For broadcasters who need guaranteed performance, tight security, or compliance with strict regulation, private cloud is unmatched. Tier 1 sports federations, broadcasters, and news outlets often rely on this model for mission-critical ingest and ultra-low-latency playout. The drawback is that it requires significant upfront CAPEX and does not offer the elasticity of hyperscaler resources in a public cloud.

Hybrid cloud has emerged as the middle ground. The concept is to use public resources for elasticity where it makes sense, and private resources for guaranteed performance, with orchestration managing the movement between them. When done well, hybrid strategies provide resilience and efficiency. A live sports broadcaster might use private encoders at a stadium, route signals via a hybrid orchestration layer, and deliver to multiple public endpoints. Done poorly, hybrid becomes the worst of both worlds, creating more cost and complexity without real benefit. These are not just technical distinctions; they are strategic choices that shape budgets, determine levels of vendor lock-in, and influence long-term operational agility.

Media workflows also don’t move “up and down” the cloud like traditional IT traffic. Media tends to flow horizontally, east to west, from venue to distribution hub, from ingest point to playout, or from contributor to receiver. It is therefore more accurate to think of cloud choices as zones along a spectrum, where different parts of the workflow may sit in different places depending on their requirements.

The CRISP Framework is designed to help navigate these choices. Its five dimensions are the ones that consistently matter in broadcast and media operations. Cost control is about predictability. For workflows with heavy data transfer, egress fees can rapidly wipe out the expected savings, so private infrastructure gives more stable costs, while public cloud offers flexibility at the price of volatility. Reliability concerns uptime guarantees and disaster recovery; hybrid and private models tend to score higher here, as public availability zones can and do fail. Integration is critical in an industry where legacy systems and regulatory obligations are ever-present. Hybrid is often the most adaptable, while public can be more difficult to align with entrenched workflows. Security and compliance need little explanation: private infrastructure provides the tightest control, while public requires trusting the hyperscalers. Performance is the most obvious factor, and for ultra-low-latency applications, private remains the gold standard. Public is well suited to batch workloads, while hybrid balances both extremes.

These five factors provide a way to compare cloud models on equal terms. The results show a clear pattern: each model has strengths and weaknesses, and no single approach fits all.

What becomes clear from this analysis is that there is no universal winner. Each cloud model performs differently depending on the workload. Public delivers flexibility but at the cost of control. Hybrid excels in contribution and disaster recovery, but only if supported by orchestration. Private remains the most reliable and secure, but with less elasticity and higher capital cost.
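One way to make a CRISP comparison concrete is a simple weighted score. The sketch below is our own illustration, not a published scoring method: the dimension keys, the 1 to 5 rating scale, and every number in the example are hypothetical placeholders that each organisation would replace with its own assessment for a specific workload.

```python
CRISP = ("cost", "reliability", "integration", "security_compliance", "performance")


def crisp_score(ratings, weights):
    """Weighted average of the five CRISP ratings for one cloud model."""
    total_weight = sum(weights[d] for d in CRISP)
    return sum(ratings[d] * weights[d] for d in CRISP) / total_weight


def rank_models(models, weights):
    """Model names ordered from highest to lowest weighted score."""
    return sorted(models, key=lambda name: crisp_score(models[name], weights), reverse=True)


if __name__ == "__main__":
    # Placeholder ratings (1 = weak, 5 = strong) for one imagined workload.
    models = {
        "public": dict(zip(CRISP, (5, 3, 2, 3, 2))),
        "hybrid": dict(zip(CRISP, (3, 4, 4, 4, 3))),
        "private": dict(zip(CRISP, (2, 4, 3, 5, 5))),
    }
    # Two placeholder priority profiles applied to the same ratings.
    cost_first = dict(zip(CRISP, (5, 1, 1, 1, 1)))
    performance_first = dict(zip(CRISP, (1, 1, 1, 3, 5)))
    print("cost first:", rank_models(models, cost_first))
    print("performance first:", rank_models(models, performance_first))
```

With these placeholder numbers the two priority profiles produce opposite rankings from identical ratings, which is the practical meaning of "no universal winner": the outcome depends on what the workload needs most.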

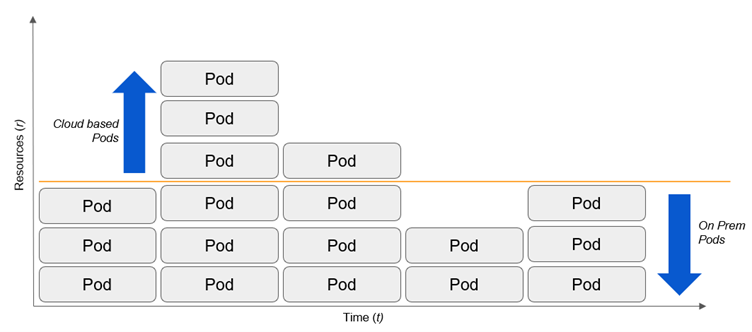

The way these choices play out is best seen through real-world examples. A lower-tier sports customer, for instance, only requires significant capacity on weekends when matches are scheduled. During the week, demand is minimal or non-existent. For them, a public model makes sense, where cloud resources handle the workflow only when they are needed.

By contrast, a network operator has a very different requirement. Their services run 24 hours a day, seven days a week, supporting permanent transport streams across multiple regions. For this type of customer, private cloud or dedicated infrastructure provides the most reliable and predictable solution. The priority is not elasticity but stability, security, and guaranteed performance for continuous delivery.

A news organisation faces yet another challenge. Their daily workloads can be handled reliably by on-premises infrastructure, but breaking news events demand instant scalability. In those moments, cloud bursting provides additional capacity, ensuring that feeds can be distributed globally without interruption. This mix of permanent local resources with cloud expansion at peak times delivers both resilience and efficiency.
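The difference between the weekend sports customer and the 24/7 network operator comes down largely to utilisation. A minimal sketch of that comparison follows, assuming a flat hourly on-demand rate and a flat weekly cost for dedicated infrastructure. Both figures are hypothetical placeholders, and the model deliberately ignores egress, staffing, and amortisation details.

```python
HOURS_PER_WEEK = 7 * 24


def break_even_hours(fixed_weekly_cost, hourly_rate):
    """Active hours per week at which on-demand spend equals the fixed cost."""
    return fixed_weekly_cost / hourly_rate


def cheaper_model(active_hours, hourly_rate, fixed_weekly_cost):
    """Return 'on-demand' or 'fixed', whichever costs less for the week."""
    on_demand_cost = active_hours * hourly_rate
    return "on-demand" if on_demand_cost < fixed_weekly_cost else "fixed"


if __name__ == "__main__":
    HOURLY_RATE = 4.0          # hypothetical on-demand price per hour
    FIXED_WEEKLY_COST = 300.0  # hypothetical weekly cost of dedicated capacity

    print("break-even hours:", break_even_hours(FIXED_WEEKLY_COST, HOURLY_RATE))
    # Weekend-only sports customer: about 12 active hours per week.
    print("weekend sports:", cheaper_model(12, HOURLY_RATE, FIXED_WEEKLY_COST))
    # Network operator running permanently: all 168 hours of the week.
    print("24/7 operator:", cheaper_model(HOURS_PER_WEEK, HOURLY_RATE, FIXED_WEEKLY_COST))
```

Under these assumed prices the break-even sits at 75 active hours a week, so the weekend-only customer lands well below it and the permanent service well above it. The news organisation's case is the mixed one: its baseline sits above the line and its peaks do not.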

What all these examples make clear is that cloud models only become truly effective when orchestrated. Infrastructure alone, whether public, private, or hybrid, does not solve the problem. Without orchestration, resources remain siloed and underutilised. With orchestration, organisations can make decisions dynamically, matching workloads to infrastructure in real time. GlobalM’s GMX1 Core and GMDC API provide exactly this layer, offering real-time routing, auto-scaling of resources, region-aware handoffs, and end-to-end monitoring. This enables broadcasters to take advantage of whichever cloud model is most appropriate in the moment, without being locked into one approach. In practice, orchestration is the invisible layer that turns cloud from a marketing term into an operational advantage.
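The simplest decision an orchestration layer makes is the bursting one: fill private capacity first, then overflow to public resources. The sketch below illustrates only that rule. It is not the GMX1 Core or GMDC API; the `Placement` type and `place_feeds` function are hypothetical names invented for this example, and a real orchestrator would also weigh latency, region, and cost.

```python
from dataclasses import dataclass


@dataclass
class Placement:
    private_feeds: int  # feeds kept on owned or dedicated infrastructure
    public_feeds: int   # feeds burst to on-demand public cloud capacity


def place_feeds(demand, private_capacity):
    """Fill private capacity first and send any overflow to public cloud."""
    if demand < 0 or private_capacity < 0:
        raise ValueError("demand and private_capacity must be non-negative")
    on_private = min(demand, private_capacity)
    return Placement(private_feeds=on_private, public_feeds=demand - on_private)


if __name__ == "__main__":
    # A normal news day fits within the on-premises baseline.
    print(place_feeds(demand=8, private_capacity=10))
    # A breaking story exceeds it, so the overflow bursts to public cloud.
    print(place_feeds(demand=25, private_capacity=10))
```

Re-evaluating a rule like this continuously as demand changes is what turns three separate infrastructure models into one operational system.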

Looking ahead, regulation and sustainability will add further pressure to these decisions. Governments are tightening rules on where sensitive media data can be stored and who controls it. Broadcasters are facing growing expectations to reduce energy consumption and carbon emissions. Private infrastructure may offer compliance and predictability, while hyperscalers are investing in renewable-powered data centres. Hybrid may strike the right balance, but only if supported by orchestration that ensures cost, performance, and sustainability goals are all met simultaneously. Cloud strategy, in other words, cannot be static. It must be continually revisited as conditions evolve.

The industry needs to move beyond hype-driven evangelism. It is no longer enough to declare that an organisation is “moving to the cloud.” The real challenge is to decide which cloud, how much of it, and for which parts of the workflow. The CRISP Framework provides a rational, structured way to have that conversation, giving engineers and executives a common language for assessing risk, cost, and performance. Real-world deployments show that the right answer is always contextual, workload-specific, and orchestrated.

Fit-for-purpose choices will always outperform trend-driven ones. With the right orchestration layer in place, broadcasters and rights holders can embrace the flexibility of public, private, and hybrid cloud on their own terms, and remain firmly in control of their media destiny.