Latency has real-world consequences far beyond the data centre. In video contribution and distribution, latency (the delay between capturing a signal and displaying it) can be the difference between success and failure, between winning and losing, even between life and death. From live sports to surgery, low latency isn’t just a technical ideal; it’s a necessity.

What is Latency and Why It Matters

At its core, latency refers to the time it takes for a video signal to travel from the source (say, a camera on the sidelines of a football match) to the viewer’s screen. This delay creeps in at several stages: the encoder compressing the video, the network transporting it across the globe, and the decoder decompressing it again at the other end. Add buffering and queuing into the mix, as well as any transcoding, and you’ve got a chain of potential delays that can add up fast.

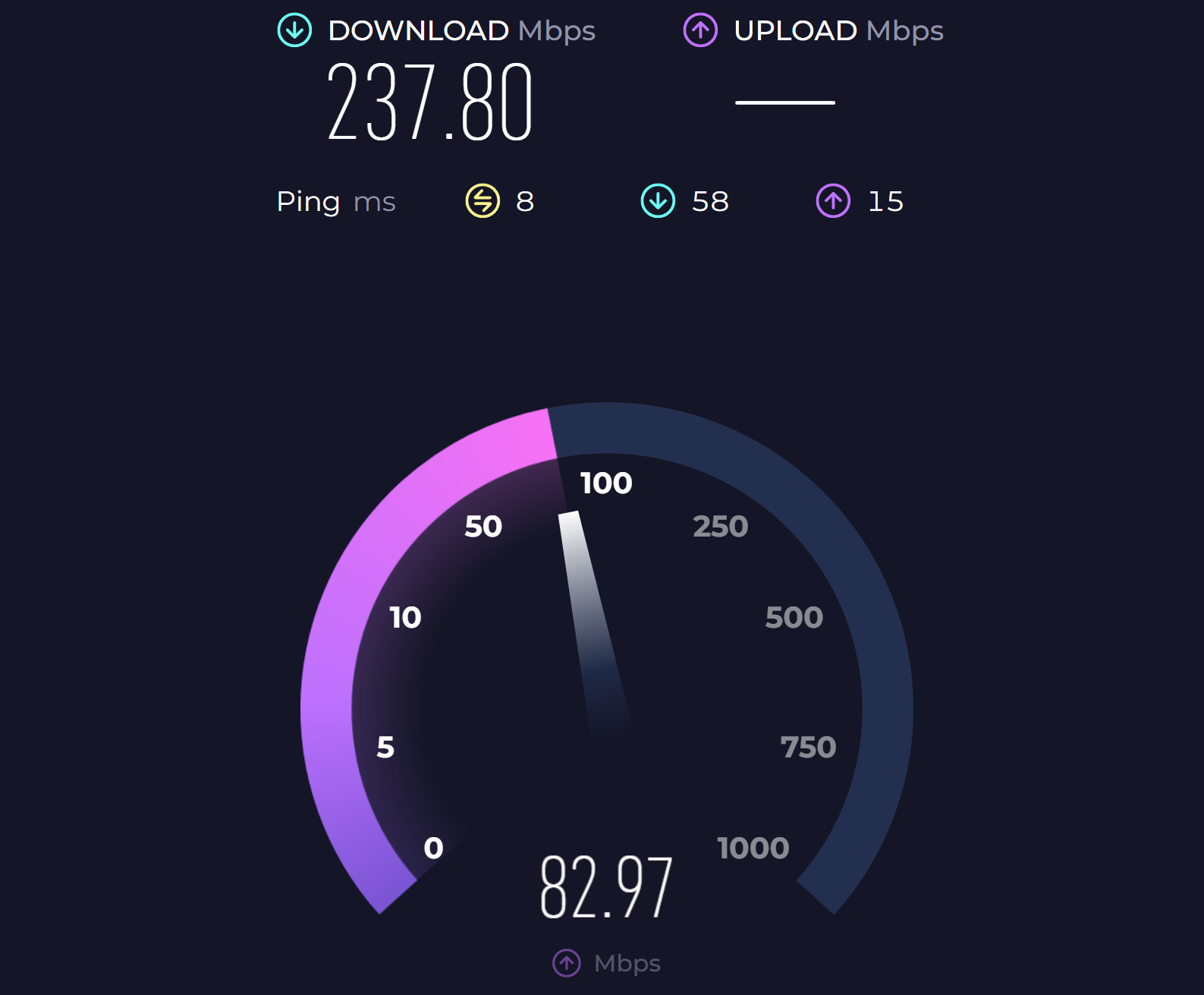

Latency doesn’t exist in isolation. It’s deeply tied to the characteristics of the network and of the encoder and decoder being used. When latency is reduced, throughput (the rate at which data can be successfully transmitted) tends to increase, because a transport that waits for acknowledgements can only move a limited amount of data per round trip. It’s a simple but often overlooked fact. Higher throughput allows more stable, high-bitrate video transport, which is particularly vital for broadcasters moving many megabits per second of high-resolution footage. Networks also become more predictable, which matters a great deal when you’re trying to ensure consistent performance for contribution and distribution feeds.
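A minimal sketch of that relationship, assuming a window-based transport such as TCP, where the sender may only have one window of unacknowledged data in flight per round trip, so throughput is capped at window ÷ RTT. The 1 MiB window is an illustrative assumption, not a measured figure, and UDP-based protocols such as SRT pace traffic differently, so treat this as intuition rather than a model of any particular product.

```python
def max_throughput_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on throughput for a window-limited sender: window / RTT."""
    if rtt_ms <= 0:
        raise ValueError("RTT must be positive")
    bits_per_round_trip = window_bytes * 8
    return bits_per_round_trip / (rtt_ms / 1000.0) / 1e6


# Same (hypothetical) 1 MiB window over progressively longer paths
for rtt in (10, 50, 200):
    print(f"RTT {rtt:>3} ms -> at most {max_throughput_mbps(1_048_576, rtt):.1f} Mbps")
```

Quadrupling the RTT cuts the ceiling to a quarter, which is why shorter paths help sustain high-bitrate feeds.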

Latency is also affected by packet loss and jitter. With ARQ (Automatic Repeat reQuest) protocols, lost packets must be retransmitted, which adds delay, while jitter (variation in packet arrival times) can disrupt smooth playback. Reducing both is essential to building a predictable transmission environment, particularly for mission-critical or interactive applications.
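Jitter can be quantified in several ways. The sketch below uses the interarrival jitter estimator defined in RFC 3550 (the RTP specification): for each pair of consecutive packets it compares the change in arrival time with the change in send time, then smooths the absolute difference with a gain of 1/16. Timestamps are assumed to be in milliseconds rather than RTP clock units, and the function name is our own.

```python
from typing import Iterable, Optional, Tuple


def interarrival_jitter(packets: Iterable[Tuple[float, float]]) -> float:
    """Estimate jitter from (send_time_ms, arrival_time_ms) pairs in arrival order."""
    jitter = 0.0
    prev: Optional[Tuple[float, float]] = None
    for send_ms, arrive_ms in packets:
        if prev is not None:
            prev_send, prev_arrive = prev
            # D: how much the transit time changed between consecutive packets
            d = (arrive_ms - prev_arrive) - (send_ms - prev_send)
            # Running estimate: move 1/16 of the way towards |D|
            jitter += (abs(d) - jitter) / 16.0
        prev = (send_ms, arrive_ms)
    return jitter


# Packets sent every 20 ms; the third one arrives 16 ms later than expected
print(interarrival_jitter([(0, 50), (20, 70), (40, 106)]))
```

Constant transit time yields zero jitter; only variation between packets moves the estimate.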

Real-World Stakes: Where Latency Hits Hard

Let’s talk about live sports betting. There’s a reason this industry invests so heavily in low-latency infrastructure. In the 1990s, seasoned punters could take advantage of broadcast delay by being physically present at horse racing tracks. Armed with newly available mobile phones, they’d wait until the top of the straight, see who was making a break, and place a bet before the odds had time to react. The delay (several seconds) gave them an unfair advantage. Fast forward to today, and the industry has had to adapt. With in-play betting, milliseconds matter. The integrity of the market depends on everyone receiving the same information at virtually the same time. Any perceptible delay introduces a window for exploitation.

It’s not just about the video, either. Betting platforms rely on real-time statistical data, from player tracking to ball speed, and any delay in that data reaching the user affects the flow of bets, confidence in the odds, and ultimately the platform’s revenue. That data pipeline needs to be as close to real-time as the video feed itself, or the system starts to fall apart.

Another arena where latency becomes tangible is two-way communication. Live crosses to field reporters, interviews with multiple participants, and panel shows all rely on natural conversational rhythm. When that rhythm is broken by delay, the result is jarring. The ITU (in Recommendation G.114) advises that one-way transmission time should ideally be less than 150 milliseconds, which puts the recommended round-trip delay at no more than 300 milliseconds for acceptable voice latency [1]. Once you exceed that, conversations become difficult to manage. People talk over each other, react too slowly, or become visibly confused. It’s a dynamic we’ve all seen on television when there’s a satellite delay: awkward pauses and missed cues.
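To see how quickly the chain of delays from capture to display eats into that 150 ms one-way target, here is a simple budget check. Every per-stage figure is a hypothetical placeholder for illustration, not a measurement of any real system.

```python
ONE_WAY_TARGET_MS = 150.0  # ITU guidance for one-way delay, quoted above

# Hypothetical per-stage delays for a remote live cross (illustrative only)
STAGES_MS = {
    "capture": 17.0,
    "encode": 40.0,
    "network": 35.0,
    "receive_buffer": 40.0,
    "decode": 20.0,
    "display": 17.0,
}


def check_budget(stages: dict, target_ms: float) -> tuple:
    """Return (total one-way delay, whether it fits within target_ms)."""
    total = sum(stages.values())
    return total, total <= target_ms


total, ok = check_budget(STAGES_MS, ONE_WAY_TARGET_MS)
print(f"one-way {total:.0f} ms, round trip {2 * total:.0f} ms, within target: {ok}")
```

No single stage looks excessive, yet the total overshoots the target, so trimming any one stage (a shorter receive buffer, a faster encode) matters.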

Now place that in a newsroom, where agility and timing are everything. Imagine trying to host a live debate with contributors in different locations, each one waiting an extra half-second before they hear the host. It becomes unworkable. Low latency, in these cases, isn’t a luxury feature; it’s a basic requirement for editorial coherence.

The stakes get even higher when we look at telemedicine. In remote surgical procedures, where one surgeon may be guiding another in real time, any delay in video can have immediate, physical consequences. Latency introduces hesitation. And hesitation, in medicine, can lead to catastrophic outcomes. The medical world is increasingly turning to real-time video for consultation, collaboration, and even live operations. In such high-pressure environments, video feeds must be instantaneous and accurate to the millisecond.

Of course, these aren’t the only use cases that demand ultra-low latency. In live musical collaborations across geographies, musicians require perfectly synced audio and video to perform together. Any noticeable delay can throw off timing and ruin a performance. Similarly, virtual reality (VR) and augmented reality (AR) applications are almost entirely dependent on low latency to avoid inducing motion sickness and to preserve the illusion of immersion. A few hundred milliseconds of delay between a user’s action and the system’s response can break the experience completely.

In professional e-sports, low latency is built into the infrastructure from the ground up. Competitive players demand not just high frame rates but instant responsiveness. Delays mean missed opportunities or, worse, lost matches. Military and defence applications, such as drone piloting and surveillance feeds, also rely on real-time video. Decision makers depend on what they see, and they can’t afford to see it late.

Building a Low Latency Pipeline

So, how do we get to low latency? It’s a multi-pronged approach. First, the choice of codec matters. Some codecs, such as JPEG XS, are designed specifically for low-latency applications, offering visually lossless compression with almost imperceptible delay. However, JPEG XS’s light compression requires high bitrates that are typically carried over private leased-line networks, so it is a costly approach, used mainly in tier 1 applications.

H.265 (HEVC) can also be tuned for low-latency performance, although it’s often a balancing act between achieving compression efficiency and keeping the latency to a minimum.

That said, the most widely used codec in the world, MPEG-4 AVC/H.264, remains a critical part of the equation. While it wasn’t originally optimised for ultra-low latency, it can be engineered to deliver impressively low delay if configured correctly. The key lies in adjusting the GOP (Group of Pictures) structure. A shorter GOP means fewer predicted frames between keyframes, so decoders can join a stream or recover from errors faster. You also need to minimise or avoid B-frames, which improve compression efficiency but require later frames to be encoded and decoded first, introducing inherent reordering latency. Enabling ‘low delay’ or ‘low latency’ modes in the encoder settings further reduces buffering and processing time, especially when using hardware encoders or tightly tuned software stacks. It’s not just about choosing the right codec; it’s about configuring it precisely for the use case.
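Two of those levers can be made concrete. The first function estimates the extra delay B-frames add, on the simplified assumption that each consecutive B-frame makes the encoder wait one more frame period for its forward reference. The second assembles FFmpeg arguments for libx264 using existing options (`-preset`, `-tune zerolatency`, `-bf`, `-g`); the specific preset and the one-second GOP are illustrative choices, not recommendations for any particular workflow.

```python
def bframe_reorder_delay_ms(consecutive_bframes: int, fps: float) -> float:
    """Approximate delay from waiting for the forward reference of a B-frame run."""
    return consecutive_bframes * 1000.0 / fps


def low_latency_x264_args(fps: int, gop_seconds: float = 1.0) -> list:
    """FFmpeg video-encoder arguments for a low-delay libx264 encode."""
    gop_frames = max(1, round(fps * gop_seconds))
    return [
        "-c:v", "libx264",
        "-preset", "veryfast",   # trades compression efficiency for encode speed
        "-tune", "zerolatency",  # x264 tune that disables lookahead and B-frames
        "-bf", "0",              # no B-frames, stated explicitly
        "-g", str(gop_frames),   # keyframe interval (GOP length) in frames
    ]


print(bframe_reorder_delay_ms(3, 50))  # three B-frames at 50 fps
print(" ".join(low_latency_x264_args(50)))
```

These arguments would be spliced into a full FFmpeg command line between the input and output specifications.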

Second, the transport protocol is key. Traditional streaming protocols like RTMP, which runs over TCP, were not designed for real-time contribution over lossy networks, and while they’ve served the industry well, they fall short when milliseconds count. In contrast, newer protocols such as SRT and RIST were built from the ground up with real-time performance in mind. These protocols offer faster connection establishment, robust handling of jitter and packet loss, and support for adaptive bitrate to help maintain stability in fluctuating network conditions. They can also deliver a level of Quality of Service (QoS) that is normally only achievable with a private leased-line network.

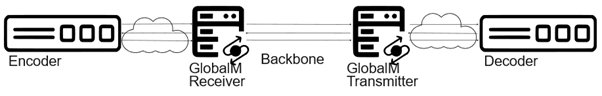

This is where a distributed architecture really begins to stand apart. While a typical SRT deployment connects encoder to decoder directly or via a static gateway, packet loss anywhere along that path means the decoder must send a negative acknowledgement (NAK) and wait for the encoder to retransmit the missing data. To account for this round-trip exchange, broadcasters often have to configure latency buffers up to four times the round-trip time, drastically increasing overall latency, particularly over longer geographic distances.
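The sizing rule described above is easy to express. This sketch applies the four-times-RTT multiplier to a single end-to-end hop; the 120 ms minimum is an assumed floor added for illustration, and real deployments would tune both values to measured loss.

```python
def srt_buffer_ms(rtt_ms: float, multiplier: float = 4.0, floor_ms: float = 120.0) -> float:
    """Receiver latency buffer sized as a multiple of round-trip time."""
    return max(rtt_ms * multiplier, floor_ms)


# Hypothetical end-to-end RTTs: regional, continental, intercontinental
for rtt in (20.0, 80.0, 250.0):
    print(f"RTT {rtt:>5.0f} ms -> buffer {srt_buffer_ms(rtt):>6.0f} ms")
```

Over an intercontinental path, the buffer alone approaches a full second before any encode or decode delay is added.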

GlobalM rethinks this model entirely. We use a stateless, distributed gateway architecture that breaks the transmission path into smaller, isolated retransmission domains. If a packet is lost, it only needs to be retransmitted from the nearest stream pod, typically the edge of the network and not all the way from the source encoder. This compartmentalised approach not only reduces end-to-end latency but also makes network behaviour more predictable and resilient to loss.
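A simplified way to compare the two models is to look at how long it takes to recover one lost packet. Assuming recovery costs one round trip across the retransmission domain (a NAK travels back, the retransmitted packet travels forward) and ignoring loss-detection and processing time, the sketch below contrasts a single end-to-end domain with a path split at gateways. The segment delays are hypothetical, and this is not a model of GlobalM’s internal implementation.

```python
from typing import List


def recovery_end_to_end_ms(one_way_ms: List[float]) -> float:
    """Loss anywhere means a NAK to the source encoder and a resend over the full path."""
    return 2 * sum(one_way_ms)


def recovery_segmented_ms(one_way_ms: List[float], lossy_segment: int) -> float:
    """Loss is repaired by the gateway at the start of the segment where it occurred."""
    return 2 * one_way_ms[lossy_segment]


# Hypothetical one-way delays: venue -> ingest pod, backbone, edge pod -> decoder
path = [5.0, 60.0, 10.0]
print(recovery_end_to_end_ms(path))     # whole path is one retransmission domain
print(recovery_segmented_ms(path, 2))   # loss on the last-mile segment only
```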

And because the architecture is stateless and cloud-native, GlobalM scales effortlessly. Unlike standard approaches, where every new taker adds strain to the venue’s outgoing bandwidth, GlobalM duplicates streams within the network. This means you can scale to dozens or hundreds of takers without adding any load to the venue’s internet connection.
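The bandwidth difference is simple arithmetic. Assuming each taker receives one full-bitrate copy, a venue that serves takers directly must upload one stream per taker, whereas in-network duplication keeps the venue’s upload at a single stream. The 20 Mb/s bitrate and taker counts are hypothetical, and the sketch ignores any redundant paths a real deployment might add.

```python
def venue_uplink_mbps(stream_mbps: float, takers: int, duplicate_in_network: bool) -> float:
    """Upload bandwidth required at the venue for a given number of takers."""
    if takers < 1:
        raise ValueError("need at least one taker")
    return stream_mbps if duplicate_in_network else stream_mbps * takers


for takers in (1, 10, 100):
    direct = venue_uplink_mbps(20.0, takers, duplicate_in_network=False)
    cloud = venue_uplink_mbps(20.0, takers, duplicate_in_network=True)
    print(f"{takers:>3} takers: direct {direct:>6.0f} Mb/s, in-network {cloud:.0f} Mb/s")
```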

Third, the physical topology of the network makes a difference. GlobalM leverages edge computing by design, bringing content as close to the source and destination as possible. This architectural decision reduces unnecessary hops across the public internet and dramatically shortens packet paths, which means fewer opportunities for loss and delay.
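Path length translates directly into delay. Light in optical fibre travels at roughly two-thirds of its speed in a vacuum, about 200 km per millisecond, so every extra kilometre of routing adds propagation time before any queuing or retransmission. The route lengths below are hypothetical examples of a direct path versus a detour.

```python
FIBRE_KM_PER_MS = 200.0  # approximate speed of light in glass (refractive index ~1.5)


def propagation_ms(route_km: float) -> float:
    """One-way propagation delay over a fibre route, excluding queuing and processing."""
    return route_km / FIBRE_KM_PER_MS


direct_km, detour_km = 5_000.0, 8_000.0  # hypothetical routes between the same endpoints
for label, km in (("direct", direct_km), ("detour", detour_km)):
    one_way = propagation_ms(km)
    print(f"{label}: {one_way:.0f} ms one way, {2 * one_way:.0f} ms round trip")
```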

In parallel, our network management strategy prioritises QoS at every level. By isolating retransmission domains and optimising routing, we make sure that video packets are not only prioritised but also handled intelligently across the entire distribution chain. What’s more, our network measures the round-trip time (RTT) of each segment at the start of a transmission. This information allows GlobalM to preconfigure SRT link buffers to the lowest safe latency before content begins flowing. Since SRT does not support resizing its latency buffer mid-stream (the value is agreed when the connection is established), knowing the RTTs in advance ensures that each segment starts optimised from the outset, avoiding the typical overprovisioning of latency buffers and delivering better performance from the very first frame.
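The idea of measuring first and fixing buffers before media flows can be sketched as follows. The RTT probe is a hypothetical stand-in (here a dictionary lookup), the four-times multiplier follows the rule of thumb mentioned earlier, and the 20 ms minimum is an assumption; a frozen dataclass mirrors the fact that SRT’s latency cannot be changed once a connection is up. This illustrates the concept only and is not GlobalM’s actual algorithm.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)  # immutable: mirrors a buffer fixed for the connection's lifetime
class SegmentPlan:
    name: str
    rtt_ms: float
    latency_ms: float


def plan_segments(
    segments: List[str],
    measure_rtt_ms: Callable[[str], float],
    multiplier: float = 4.0,
    min_latency_ms: float = 20.0,
) -> List[SegmentPlan]:
    """Probe each segment's RTT once, before any media flows, and size its buffer."""
    plans = []
    for name in segments:
        rtt = measure_rtt_ms(name)
        plans.append(SegmentPlan(name, rtt, max(rtt * multiplier, min_latency_ms)))
    return plans


# Hypothetical probe results for a three-segment route
probed = {"venue->ingest": 8.0, "ingest->edge": 70.0, "edge->taker": 12.0}
for plan in plan_segments(list(probed), probed.__getitem__):
    print(plan)
```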

The result is a video contribution and distribution network that operates with clockwork precision even at the scale of breaking news or major global sports events.

All of this requires careful engineering and design. It means treating latency not as a vague technical concept, but as a concrete performance metric, something to be measured, monitored, and improved. It’s about asking hard questions: What is my encoder-to-decoder latency? How efficiently does my protocol deal with jitter? Is my network introducing unnecessary latency? If you’re in the business of live contribution and distribution, these are not theoretical questions; they’re daily concerns.
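Treating latency as a metric means looking at its distribution, not just an average, because occasional spikes are what viewers and operators notice. Here is a minimal sketch using Python’s standard `statistics.quantiles` (available from Python 3.8) to summarise latency samples against a target. How the samples are collected, for example by comparing embedded timestamps at source and destination, is outside its scope, and the sample values are invented.

```python
import statistics
from typing import Dict, Sequence


def latency_report(samples_ms: Sequence[float], target_ms: float) -> Dict[str, float]:
    """Summarise end-to-end latency samples; needs at least two samples."""
    cuts = statistics.quantiles(samples_ms, n=100)  # 99 cut points: p1..p99
    return {
        "p50": cuts[49],
        "p99": cuts[98],
        "max": max(samples_ms),
        "over_target": sum(1 for s in samples_ms if s > target_ms),
    }


# Invented samples: steady 100 ms with one 400 ms spike
samples = [100.0] * 99 + [400.0]
print(latency_report(samples, target_ms=150.0))
```

Here the median looks healthy while p99 exposes the spike, exactly the behaviour an average would hide.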

Conclusion: Time is the Product

At its core, latency is not just a technical measure, it’s a practical constraint that shapes how live video is delivered, experienced, and relied upon. Whether you’re enabling a bet to be placed in real time, facilitating a seamless on-air conversation, or transmitting life-critical images during a medical procedure, what you’re really delivering is timing. And that timing needs to be spot on.

There are also critical use cases we haven’t touched on in this article. Remote production workflows, a subject for a separate article, depend heavily on responsive video links, not just for monitoring but for control. When a CCU (Camera Control Unit) operator is racking cameras from a control room hundreds or thousands of kilometres away, every millisecond of delay makes the job harder. A high-latency connection means overshooting adjustments, delayed feedback, and ultimately degraded picture quality. The same applies to tally systems, intercoms, return video feeds, and remote replay servers, all of which must operate in tight synchrony to maintain production standards.

In the broadcast world, delays aren’t just inconvenient, they’re disruptive. High latency erodes trust, damages workflows, and undercuts viewer expectations. On the flip side, a low-latency network enables faster decisions, more accurate reporting, and more interactive storytelling.

At GlobalM, we’ve engineered our entire architecture around this principle. Because in live video, time isn’t a luxury, it’s the product. And it’s our job to deliver it without compromise.